HDFS Basic Operations

Publish Date: 2022-07-06

The Apache Hadoop project develops open-source software for reliable, scalable, and efficient distributed computing. The Hadoop Distributed File System (HDFS) is a distributed file system that stores data on low-cost machines and provides high aggregate bandwidth across the cluster (Shvachko et al. 2010).

In this article we will show you the most commonly used commands for working with the HDFS file system on a daily basis.

Getting to Know Common HDFS Commands

Start Hadoop

Before running any of the commands below, make sure that all the Hadoop JVM processes are running. Start Hadoop with the following commands:

$ start-dfs.sh

$ start-yarn.sh

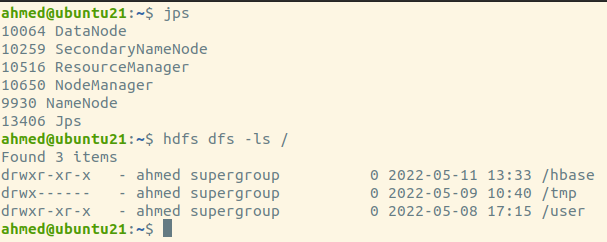

After that, check the running processes with the jps command:

$ jps

44993 ResourceManager

45573 Jps

45128 NodeManager

44761 SecondaryNameNode

44538 DataNode

44383 NameNode
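When Hadoop is started from a script, the jps check above can be automated. The following is a minimal sketch, assuming the five daemons of the single-node setup shown above; check_hadoop_daemons is a hypothetical helper name, not part of Hadoop.

```shell
# Hypothetical helper: verify that the expected Hadoop daemons appear in jps output.
# Assumes `jps` (shipped with the JDK) is on the PATH.
check_hadoop_daemons() {
  local running daemon missing=0
  running="$(jps)"
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Compare the second column exactly so NameNode does not match SecondaryNameNode.
    if printf '%s\n' "$running" | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "OK      $daemon"
    else
      echo "MISSING $daemon"
      missing=1
    fi
  done
  # Exit status is 0 only when every daemon was found.
  return "$missing"
}
```

Calling check_hadoop_daemons after start-dfs.sh and start-yarn.sh returns a non-zero status if any of the listed daemons is missing.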

For the commands that follow you can also use the generic "hadoop fs" syntax, which works with any file system that Hadoop supports. We will use the "hdfs dfs" syntax, which targets HDFS specifically.

How to get help

To get general help, run the "hdfs dfs -help" command.

If you only want help on a specific command, pass its name as an argument. For example, "hdfs dfs -help ls" shows how to use the ls command.

The output lists all the options that the ls command accepts and what each one means.

List and display content

You can display the contents of the root (/) directory with the ls command (your output may differ depending on your Hadoop environment):

$ hdfs dfs -ls /

Found 3 items

drwxr-xr-x - ahmed supergroup 0 2022-06-21 11:02 /hbase

drwx-w---- - ahmed supergroup 0 2022-05-23 21:12 /tmp

drwxr-xr-x - ahmed supergroup 0 2022-05-23 19:38 /user

As you can see, there are three folders, each listed with its permissions, owner, group, and modification time. Our main focus in the next sections will be the user folder, where we will put all of our data.

If we list the user folder, you should already see a folder named after your username, in our case ahmed (the username we chose for our demos):

$ hdfs dfs -ls /user

Found 2 items

drwxr-xr-x - ahmed supergroup 0 2022-05-23 19:38 /user/hive

drwxr-xr-x - ahmed supergroup 0 2022-05-25 13:02 /user/ahmed

If we look inside that folder, we see all the data we have created:

$ hdfs dfs -ls /user/ahmed

Found 3 items

drwxr-xr-x - ahmed supergroup 0 2022-05-26 21:05 /user/ahmed/data

drwxr-xr-x - ahmed supergroup 0 2022-05-23 18:41 /user/ahmed/input

drwxr-xr-x - ahmed supergroup 0 2022-05-23 19:09 /user/ahmed/output2

By the way, you can get the same listing without typing the full path every time. When no path is given, HDFS uses your home directory (/user/ahmed in our case), and relative paths are resolved against it:

$ hdfs dfs -ls

Found 3 items

drwxr-xr-x - ahmed supergroup 0 2022-05-26 21:05 data

drwxr-xr-x - ahmed supergroup 0 2022-05-23 18:41 input

drwxr-xr-x - ahmed supergroup 0 2022-05-23 19:09 output2

Create folders

The mkdir command creates directories in HDFS. Let’s say we want to create a folder named datasets to store our files. We can do so with the following command:

$ hdfs dfs -mkdir /user/ahmed/datasets

$ hdfs dfs -ls

Found 4 items

drwxr-xr-x - ahmed supergroup 0 2022-05-26 21:05 data

drwxr-xr-x - ahmed supergroup 0 2022-07-08 15:30 datasets

drwxr-xr-x - ahmed supergroup 0 2022-05-23 18:41 input

drwxr-xr-x - ahmed supergroup 0 2022-05-23 19:09 output2

Alternatively, use a relative path, which has the same effect (run one form or the other, since mkdir reports an error if the directory already exists):

$ hdfs dfs -mkdir datasets
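Plain mkdir also fails when a parent directory is missing. A minimal sketch of a more forgiving variant follows, built on the standard -test -d and -mkdir -p options; ensure_hdfs_dir is a hypothetical helper name, and the hdfs client is assumed to be on the PATH.

```shell
# Hypothetical helper: create an HDFS directory (and its parents) only when missing.
ensure_hdfs_dir() {
  local dir="$1"
  # -test -d exits with status 0 if the path exists and is a directory.
  if hdfs dfs -test -d "$dir"; then
    echo "exists: $dir"
  else
    # -p creates missing parent directories, like mkdir -p on Linux.
    hdfs dfs -mkdir -p "$dir" && echo "created: $dir"
  fi
}
```

For example, ensure_hdfs_dir datasets/raw/2022 could be run repeatedly from a script without producing a "File exists" error; the path here is only an illustration.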

Put data into HDFS

You can upload files from your local Linux system to a specified HDFS directory. Let’s say we have a file named file1.txt with the following content:

id,name,age

1,ahmed, 25

2,amine,19

3,sara,22

4,ali,27
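If you want to follow along, one way to create this sample file locally is a here-document. This is only a convenience sketch; any text editor works just as well.

```shell
# Create the sample file in the current local directory.
# The quoted 'EOF' keeps the shell from expanding anything inside the block.
cat > file1.txt <<'EOF'
id,name,age
1,ahmed, 25
2,amine,19
3,sara,22
4,ali,27
EOF

# Quick local sanity check before uploading: show the file.
cat file1.txt
```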

Now run the following command to upload this file into HDFS:

$ hdfs dfs -put file1.txt /user/ahmed/datasets

Run the ls command to check that file1.txt has been uploaded to the /user/ahmed/datasets directory:

$ hdfs dfs -ls /user/ahmed/datasets

Found 1 items

-rw-r--r-- 1 ahmed supergroup 47 2022-07-08 21:08 /user/ahmed/datasets/file1.txt

You can also use copyFromLocal to copy data to HDFS; it is identical to the put command. Adding the -f flag overwrites a file that already exists in HDFS, such as the one uploaded by the previous command:

$ hdfs dfs -copyFromLocal -f file1.txt /user/ahmed/datasets
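In scripts, it is often more convenient to check the upload by exit status than by reading the ls output. The sketch below combines -put -f with the standard -test -e option; put_and_verify is a hypothetical helper name, and it assumes the hdfs client is on the PATH and the target directory already exists.

```shell
# Hypothetical helper: upload a local file and confirm that it landed in HDFS.
put_and_verify() {
  local src="$1" dest_dir="$2"
  # Build the expected HDFS path, tolerating a trailing slash on the directory.
  local target="${dest_dir%/}/$(basename "$src")"

  # -f overwrites an existing file of the same name.
  hdfs dfs -put -f "$src" "$dest_dir" || return 1

  # -test -e exits with status 0 only if the path exists.
  if hdfs dfs -test -e "$target"; then
    echo "uploaded: $target"
  else
    echo "upload failed: $target" >&2
    return 1
  fi
}
```

A call such as put_and_verify file1.txt /user/ahmed/datasets then either prints the final HDFS path or returns a non-zero status that the calling script can act on.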

Read content in HDFS

To display the content of a file in HDFS, use the cat command:

$ hdfs dfs -cat /user/ahmed/datasets/file1.txt

id,name,age

1,ahmed, 25

2,amine,19

3,sara,22

4,ali,27

You can also use the text command, which outputs the content of a file in text format:

$ hdfs dfs -text /user/ahmed/datasets/file1.txt

id,name,age

1,ahmed, 25

2,amine,19

3,sara,22

4,ali,27

The text command accepts files in the following formats: zip, TextRecordInputStream, and Avro.
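For large files, printing everything with cat is rarely practical. A minimal sketch of a preview helper that pipes cat into the local head command follows; hdfs_preview is a hypothetical name, and the default of 5 lines is an arbitrary choice.

```shell
# Hypothetical helper: show only the first N lines of a file stored in HDFS.
# Assumes the `hdfs` client is on the PATH.
hdfs_preview() {
  local path="$1" lines="${2:-5}"
  # Stream the file from HDFS and keep only the first N lines locally.
  hdfs dfs -cat "$path" | head -n "$lines"
}
```

With the sample file above, hdfs_preview /user/ahmed/datasets/file1.txt 2 would print the header and the first data row. The built-in -tail option covers the opposite case by printing the last kilobyte of a file.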

Move data into HDFS

In some cases you may want to move data into HDFS instead of just copying it. In that case, use the moveFromLocal command, which cuts the data from the local machine and pastes it into HDFS.

Let’s say we have a file named file2.txt with this content:

id,city

1,annaba

2,oran

3,alger

4,adrar

To move the file to HDFS:

$ hdfs dfs -moveFromLocal file2.txt /user/ahmed/datasets/

The file2.txt file is now in the /user/ahmed/datasets/ directory in HDFS:

$ hdfs dfs -ls /user/ahmed/datasets

Found 2 items

-rw-r--r-- 1 ahmed supergroup 53 2022-07-19 16:29 /user/ahmed/datasets/file1.txt

-rw-r--r-- 1 ahmed supergroup 32 2022-07-19 16:32 /user/ahmed/datasets/file2.txt

If you check your local file system, you will see that file2.txt no longer exists there.

Copy files inside HDFS

You can also copy a file from one HDFS path to another. Let’s say we have a file3.txt in the data folder in HDFS and we want to copy it into the datasets folder:

$ hdfs dfs -cp /user/ahmed/data/file3.txt /user/ahmed/datasets/

Check the datasets folder again and you will see that a third file, file3.txt, is now available:

$ hdfs dfs -ls /user/ahmed/datasets

Found 3 items

-rw-r--r-- 1 ahmed supergroup 53 2022-07-19 16:29 /user/ahmed/datasets/file1.txt

-rw-r--r-- 1 ahmed supergroup 32 2022-07-19 16:32 /user/ahmed/datasets/file2.txt

-rw-r--r-- 1 ahmed supergroup 30 2022-07-19 16:50 /user/ahmed/datasets/file3.txt

Move files inside HDFS

If you prefer to move files or folders from one HDFS path to another, use the mv command. Let’s say we no longer need file3.txt in datasets, so we create a backup folder and move the file into it:

$ hdfs dfs -mkdir backup

$ hdfs dfs -mv /user/ahmed/datasets/file3.txt /user/ahmed/backup

The file3.txt file now exists in the /user/ahmed/backup folder and has been removed from the /user/ahmed/datasets directory:

$ hdfs dfs -ls /user/ahmed/datasets

Found 2 items

-rw-r--r-- 1 ahmed supergroup 53 2022-07-19 16:29 /user/ahmed/datasets/file1.txt

-rw-r--r-- 1 ahmed supergroup 32 2022-07-19 16:32 /user/ahmed/datasets/file2.txt

$ hdfs dfs -ls /user/ahmed/backup

Found 1 items

-rw-r--r-- 1 ahmed supergroup 30 2022-07-19 16:50 /user/ahmed/backup/file3.txt

Get files from HDFS to local

You can download files from HDFS to the local file system with the get command (copyToLocal works the same way), followed by the HDFS path of the file or directory and a local destination. The -f flag replaces the local file if it already exists:

$ hdfs dfs -get -f /user/ahmed/datasets/file1.txt .

Note: the period at the end of the command refers to the current local directory.

To save the file somewhere else, replace the period with the path of another local directory.
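As an illustration of downloading into a different directory, the sketch below creates the local target first and then calls get. fetch_from_hdfs is a hypothetical helper name, and the hdfs client is assumed to be on the PATH.

```shell
# Hypothetical helper: download an HDFS file into a local directory,
# creating that directory first if needed. Defaults to the current directory.
fetch_from_hdfs() {
  local src="$1" dest_dir="${2:-.}"
  # Create the local target directory if it does not exist yet.
  mkdir -p "$dest_dir" || return 1
  # -f overwrites a local file of the same name.
  hdfs dfs -get -f "$src" "$dest_dir"
}
```

For example, fetch_from_hdfs /user/ahmed/datasets/file1.txt ./downloads would place the file under a local downloads folder; the folder name is only an example.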

Delete data in HDFS

You can use the rm command to delete a file or folder in HDFS. For example, to remove file3.txt, use this command:

$ hdfs dfs -rm /user/ahmed/backup/file3.txt

Deleted /user/ahmed/backup/file3.txt

To remove a directory along with its contents, add the -r flag. The -f flag suppresses the error message if the path does not exist:

$ hdfs dfs -rm -r -f /user/ahmed/datasets

Deleted /user/ahmed/datasets
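Because rm -r removes a whole directory tree, scripts often guard against an empty or top-level path. The following is a minimal sketch of such a guard; safe_hdfs_rm is a hypothetical helper name and the list of protected paths is only an example. Depending on the cluster configuration, deleted files may first be moved to a trash directory, and the -skipTrash option bypasses it.

```shell
# Hypothetical helper: refuse obviously dangerous targets before deleting recursively.
# Assumes the `hdfs` client is on the PATH.
safe_hdfs_rm() {
  local target="$1"
  case "$target" in
    ""|"/"|"/user"|"/user/")
      echo "refusing to delete: '$target'" >&2
      return 1
      ;;
  esac
  # -r deletes the directory and everything below it.
  hdfs dfs -rm -r "$target"
}
```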

Summary

There are many more HDFS commands to explore, but we have covered the most important ones for everyday work. After working through the examples above, you should be able to perform the most common HDFS operations.